Ongoing Research Case Study:

How a Monthly Live User Research Program Drove a User-Centered Culture

A Fortune 500 company was eager to scale user research and human-centered design across the enterprise. Here’s how they successfully launched a recurring research lab, “Live Testing Tuesday.”

From Unmoderated to Moderated Research

The hard part was over: we had helped the enterprise financial services company to embrace user-centered design principles to the point that researchers had a steady stream of work requests coming from multiple product teams. Now, they needed a way to manage and systematize the work.

* * *

When we started working with the company 5 years earlier, they were not actively conducting user research but were research-curious. We worked with them to design and implement a practice of conducting remote unmoderated testing via UserTesting.com.

The research was solid and made an impact on the product direction, and the designers were thrilled to finally have an opportunity to test and validate their assumptions. That said, the limitations of the method were noticeable. Unmoderated testing is valuable to a point, but it gives the researcher no chance to ask the probing follow-up questions necessary to uncover the root causes of problems.

So we pushed them further. We worked with them to establish a practice of conducting remote moderated research. We trained them to facilitate 1:1 sessions and to analyze recordings. We helped them expand beyond usability testing into new methods and tools like card sorts, field studies, and journey maps. Now, they were truly getting to know their users firsthand.

From Expert-Led to Collaborative Analysis

Still, the biggest impacts of the research were felt only by the researchers conducting the sessions. Product owners and other stakeholders couldn’t get the same user exposure simply by reading a report or watching a short video clip. Reports and clips alone wouldn’t be enough to change the design culture of the organization.

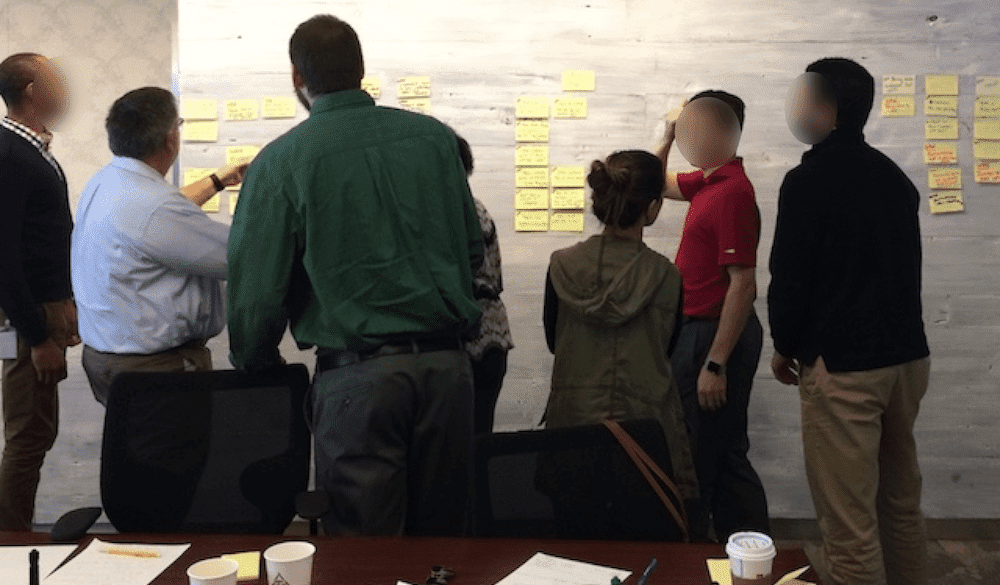

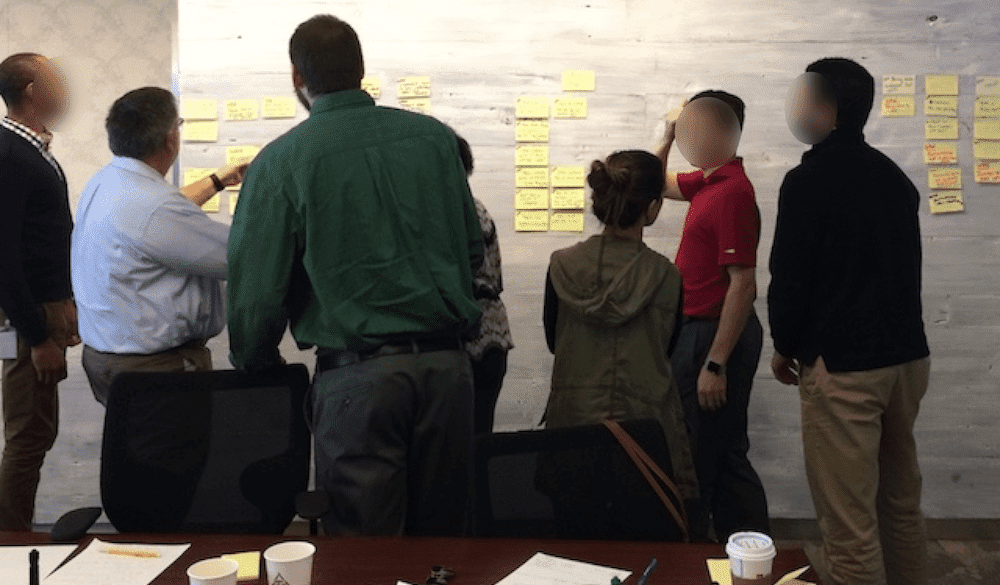

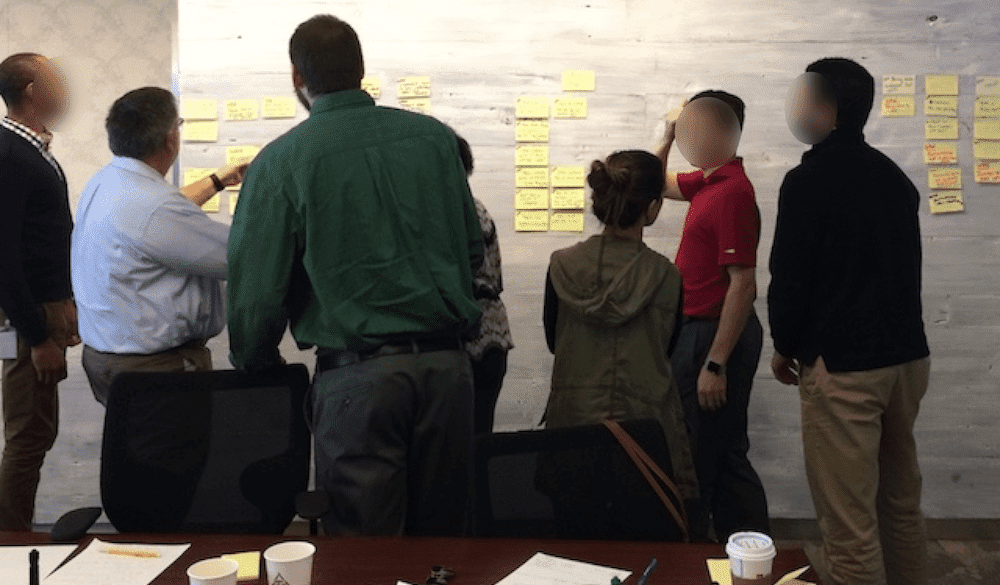

So we took an additional step: we democratized the analysis process. Instead of having researchers analyze recordings themselves and simply disseminate a report, we moved to a model of workshop-based collaborative analysis.

After the remote sessions had been moderated and recorded, we convened a workshop where all of the key stakeholders, from directors to engineers, would gather in a room to watch all of the recordings and take notes. Later in the day, we facilitated a structured process to synthesize the qualitative data, elevate the most critical issues uncovered, and brainstorm and prioritize solutions.

The process was a huge hit. Suddenly, stakeholders no longer needed to take the researcher’s word for it. They were witnessing the sessions, and the customer pain points, firsthand.

When a research report recommended a dramatic change, they had a hand in making that recommendation. Suddenly, product owners were requesting research, rather than researchers seeking permission to conduct a study. Finally, we were seeing the shift towards a user-centered culture.

Now, we wanted to scale it.

The Vision: Monthly Live Research

We had first pitched the idea a year earlier: monthly live research, observed in the morning by product teams and followed by an afternoon analysis workshop.

The vision was similar to what we’d already been doing, but had some notable innovations:

- The research would be “live.” Rather than spend the week prior to the workshop gradually conducting and recording a handful of sessions, we’d condense the research.

- We’d recruit 5 research participants to join us in the company’s offices on a single morning for back-to-back 1:1 sessions.

- A researcher and the participant would be in a conference room — our “research lab.”

- A team of stakeholders would be observing a remote feed from a nearby conference room.

- After a lunch break, we’d spend the afternoon conducting the sort of workshop that they had already developed an appetite for.

- And this would happen once a month on the same day each month, e.g. the first Tuesday of every month.

We had discussed it with our UX team partners at the company and they were excited to try it. They included it in their annual plan.

But when the plan went in front of senior management, the program got cut.

“Live Testing Tuesday”

A year later, our partners asked us to try again. “Just resend us that program description from last year and we’ll reuse it,” they said.

We did, but with one small change: we gave the program a name. We’d noticed over that year that senior managers at this client responded better to other corporate programs and initiatives when they had a name. The catchier the name, the better.

We put our marketing hats on and came up with “Live Testing Tuesday.”

A few weeks later, the program was approved.

It was a long journey from program conceptualization to reaching a place of relative stability. Here are the 3 key stages:

Stage 1: Pilot

The first thing we needed to do was to align on goals. What was the vision for the project? What problems was it intended to solve? What was it meant to accomplish that hadn’t been achieved by the previous user research efforts? We held a number of stakeholder interviews across multiple levels of leadership to ensure we had a clear vision and understanding.

Next, we needed a proof of concept. We had to show that we could plan and conduct a full research study and workshop within a month. While it was easy to focus on how much needed to be crammed into a single day of on-site research and analysis, it was just as challenging to fit all of the prep work into the month preceding that day.

We drafted several possible calendars outlining the schedule. We negotiated back and forth with the client, as some tasks would need to be completed by us, some by them, and some by both. We needed to account for time for handoffs, for peer reviews and revision, and for inevitable delays.

When we finally established an initial target schedule that all parties were happy with, we needed to identify a research project to use as a pilot. This was actually the easy part. The research backlog was full, so we had our pick of projects that would be sufficiently juicy to dig into.

Before kicking off the project, however, we needed to solidify “the team.” Given that the research and analysis would be collaborative and workshop-based, getting the right people in the room was essential. Our standard advice is to pick between 7 and 12 people and to try to get as much diversity as you can. This means not doubling up on departments. One engineer, one designer, etc. It also means trying to get a range of levels, from individual contributors to senior directors.

Ultimately, our pilot run was successful, but it certainly left us with plenty of opportunities to learn and improve in the future.

Stage 2: Iteration

It was critical that we not cement our process too early. We knew enough to know there were kinks to work out, so we gave ourselves room to iterate. Some examples of changes and enhancements:

- Multiple iterations to the monthly schedule before landing on one that stuck.

- The addition of a “Day Zero call,” a meeting prior to Live Testing Tuesday to set expectations with the team and go over some preliminary materials, enabling us to hit the ground running on the day of.

- Establishing a “participant wrangler,” a person whose sole responsibility is to greet participants, guide them through security, handle consent forms and payments, etc.

- Continuously refining protocols for recruiting, screening, and scheduling.

- Solidifying templates for participant communication, etc.

In addition, following every Live Testing Tuesday, we distributed feedback forms to the team to learn what could have been improved from their perspectives. We also held a postmortem following each workshop to discuss the feedback received and agree on any necessary changes.

As we gradually began to settle on more established routines and protocols, we started the process of documentation. This would prove critical for the final stage.

Stage 3: Handoff

Part of the original vision for the Live Testing Tuesday program was to establish a practice that could outlive our involvement as an agency partner. We would help to design and kick off the program, but eventually, we would hand the keys over to the client. As we started to accumulate various pieces of documentation for different parts of the process and program, we began to build a single playbook. The goal of the playbook was that anyone could pick it up and have what they needed to conduct their own Live Testing Tuesday.

Once the playbook was completed, it was tempting to codify it, export it as a beautiful PDF, or even print it out and let it exist eternally as a guide written in stone. But this would have been counterproductive. While we felt good at the time about the processes we had established, we knew that they would continue to change and evolve over time. It was necessary to allow the playbook to continue as a collaborative, living document, to be constantly updated as the program continued to shift. And it remains in that forever incomplete state to this day.

* * *

It’s exciting to think back on how far this client has come in its UX maturity over the past 10 years.

From an organization tentatively trying out remote unmoderated testing to a team regularly inviting participants into their offices and facilitating collaborative analysis workshops, it has been a long journey and Marketade is honored to have been a part of it.
